+ Exploratory Log

I tried logging without a pre-planned format, which produced a log that's nice to read but lacks detail. I'll try some formal log formats in my next project.

START

-Tried to call the API via a browser, received "not authorized" and was asked for credentials. Searched through the source code and found that the parameters -p and -e (which are required when starting the OCC service) specify the password and username, respectively. Gave those values as my credentials and was successfully validated. Apparently the executable also supports a --help parameter, which would have told me about these (and the other) parameters.
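The browser's credential prompt suggests HTTP Basic authentication, though the log doesn't confirm it. Under that assumption, a scripted client would send the -e username and -p password in an Authorization header. Below is a minimal sketch using the JDK's java.net.http client (Java 11+). The class and method names are hypothetical, and the base URL is taken from the requests later in this log.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

class OccAuth {
    // Builds an HTTP Basic "Authorization" header value from the username (-e)
    // and password (-p) given to the OCC service at startup.
    // Assumption: OCC uses Basic auth; the log only shows a browser credential prompt.
    static String basicAuthHeader(String username, String password) {
        String pair = username + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    // Builds an authenticated GET request for a route such as /v1/user.
    static HttpRequest authorizedGet(String baseUrl, String path,
                                     String username, String password) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path))
                .header("Authorization", basicAuthHeader(username, password))
                .GET()
                .build();
    }

    // Sends the request and returns the response body (needs a running OCC instance).
    static String fetch(HttpRequest request) throws Exception {
        HttpClient client = HttpClient.newHttpClient();
        return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

For example, `OccAuth.fetch(OccAuth.authorizedGet("http://127.0.1.1:8080", "/v1/user", user, pass))` would replay the browser call from a test, with `user` and `pass` being whatever was passed to -e and -p.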

-Testing the /v1/user route, which is supposed to count the number of API calls a user has made in total, per day, and per minute. I am attempting to reproduce the issue with the counters that I saw when using Palantir's browser frontend to access their instance of OCC.

ISSUE 1: When calling /v1/user, the per-day call counter sometimes resets along with the per-minute counter. I haven't been able to pin down a pattern, but it seems to happen more often when the service has just started, or when the per-day counter is low.
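One way to hunt for a pattern is to poll /v1/user repeatedly and flag every reading where the per-day count goes down. The sketch below shows that check under several assumptions. The per-day values must already be extracted from the responses, since the response format isn't recorded here. All readings must fall within one day, and no other client may be calling the API at the same time. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class DayCounterCheck {
    // Returns the indices of readings where the per-day counter dropped
    // compared with the previous reading. Within a single day, with no
    // concurrent clients, each /v1/user call should only ever increase it.
    static List<Integer> findUnexpectedResets(int[] perDayReadings) {
        List<Integer> resets = new ArrayList<>();
        for (int i = 1; i < perDayReadings.length; i++) {
            if (perDayReadings[i] < perDayReadings[i - 1]) {
                resets.add(i);
            }
        }
        return resets;
    }
}
```

Recording a timestamp alongside each reading would then show whether the flagged drops cluster shortly after startup.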

FOLLOWUP ISSUE 1: After searching through the source code, I believe this happens because of the following line in ApiServer.java:

scheduler.scheduleAtFixedRate(ApiUtils.resetDayHits, 1, 14400, TimeUnit.MINUTES);

The second argument, 1, is the initial delay, so resetDayHits fires 1 minute after the OCC service starts up and only then settles into its regular schedule. Any API calls made in that first minute are therefore lost from the daily counter. The initial delay should be changed to either 0 (the reset then runs at startup, while the counter is still empty) or 14400 (one full period).
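Here is a sketch of the first suggested fix, which uses an initial delay of 0 so the first reset runs at startup instead of one minute in. A plain Runnable stands in for `ApiUtils.resetDayHits`, and the class and method names are hypothetical. The period is written as 24 * 60 minutes. Since that equals 1440, the 14400 in the quoted line works out to ten days, so the period may also be worth double-checking if a daily reset is the intent.

```java
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class DailyResetScheduler {
    // One day expressed in minutes: 24 * 60 = 1440.
    // Assumption: a one-day period is intended; the quoted ApiServer.java line passes 14400.
    static final long MINUTES_PER_DAY = 24 * 60;

    // Schedules the daily reset with no initial delay. The first run happens
    // right after startup, while the daily counter is still zero, so it does
    // not discard the first minute of calls the way a 1-minute delay does.
    static ScheduledFuture<?> start(ScheduledExecutorService scheduler, Runnable resetDayHits) {
        return scheduler.scheduleAtFixedRate(resetDayHits, 0, MINUTES_PER_DAY, TimeUnit.MINUTES);
    }
}
```

With `scheduleAtFixedRate`, the delay and period share one TimeUnit, which is why the original `1` means one minute rather than one day.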

-Retrieved example DAGs in various formats, using requests like:

http://127.0.1.1:8080/v1/documents/1?format=JSON

This returns the download URL for the specified DAG:

http://127.0.1.1:8080/v1/documents/1/JSON/uWq1l5PSvRc4
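The two-step download can be scripted. The sketch below builds the first request and splits a returned download URL into its parts, assuming only the route shapes shown above (/v1/documents/{id}?format=... and /v1/documents/{id}/{format}/{key}). The helper names are hypothetical.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class DocumentUrls {
    // Builds the URI that returns the download link for a document, e.g.
    // http://127.0.1.1:8080/v1/documents/1?format=JSON
    static URI downloadLinkRequest(String baseUrl, int id, String format) {
        String query = "format=" + URLEncoder.encode(format, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/v1/documents/" + id + "?" + query);
    }

    // Splits a download URL of the observed shape /v1/documents/{id}/{format}/{key}
    // into {id, format, key}.
    static String[] parseDownloadPath(URI downloadUrl) {
        String[] parts = downloadUrl.getPath().split("/");
        // For the example above: ["", "v1", "documents", "1", "JSON", "uWq1l5PSvRc4"]
        if (parts.length != 6 || !parts[1].equals("v1") || !parts[2].equals("documents")) {
            throw new IllegalArgumentException("Unexpected download URL: " + downloadUrl);
        }
        return new String[] {parts[3], parts[4], parts[5]};
    }
}
```

Pulling the key out separately makes it easy to write tests that change only the key, or only the format, while leaving the rest of the URL untouched.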

ISSUE 2: The download URL doesn't require authentication. This is inconsistent with the authentication required to see the list of DAGs and to retrieve the access link for a particular DAG.

CLOSED ISSUE 2: According to the documentation, this is correct behaviour.

ISSUE 3: The key at the end of the download link (here, uWq1l5PSvRc4) can be modified to slightly change the DAG that is received. Nodes are relabelled randomly, and edges and node contents are updated to fit the new labels. For example, node 0 with contents "AAA" and edge [0, 2] might become node 1 with contents "AAA" and edge [1, 2]. This happens with both replaced and newly created DAGs.

-Uploaded a new DAG, which was a copy of the example JSON DAG provided in Palantir's source. Needed the parameters "format" (set to JSON) and "file" (the DAG file).

-Replaced the contents of a DAG (using POST /v1/documents/[id])
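The log records only the names of the two upload parameters, not how they're encoded. Assuming they are multipart/form-data fields, the body for an upload or a POST /v1/documents/[id] replacement can be assembled by hand, because the JDK's HttpClient has no built-in multipart support. The boundary, file name, and helper names below are illustrative.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

class MultipartDag {
    static final String BOUNDARY = "occ-test-boundary";

    // Content-Type header value to send alongside the body.
    static String contentType() {
        return "multipart/form-data; boundary=" + BOUNDARY;
    }

    // Builds a multipart/form-data body with a "format" field and a "file"
    // field carrying the DAG document. Assumption: OCC reads these two fields
    // from a multipart upload; the log only records their names.
    static byte[] body(String format, String fileName, String fileContents) {
        String crlf = "\r\n";
        String text = "--" + BOUNDARY + crlf
                + "Content-Disposition: form-data; name=\"format\"" + crlf + crlf
                + format + crlf
                + "--" + BOUNDARY + crlf
                + "Content-Disposition: form-data; name=\"file\"; filename=\"" + fileName + "\"" + crlf
                + "Content-Type: application/octet-stream" + crlf + crlf
                + fileContents + crlf
                + "--" + BOUNDARY + "--" + crlf;
        return text.getBytes(StandardCharsets.UTF_8);
    }

    // Builds a POST /v1/documents/{id} request that replaces a DAG's contents.
    // Add an Authorization header too if the route turns out to require it.
    static HttpRequest replaceRequest(String baseUrl, int id, String format,
                                      String fileName, String fileContents) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/v1/documents/" + id))
                .header("Content-Type", contentType())
                .POST(HttpRequest.BodyPublishers.ofByteArray(body(format, fileName, fileContents)))
                .build();
    }
}
```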

-Redownloaded the uploaded DAGs.

FOLLOWUP ISSUE 3: This may not be related to changes in the key. Repeated calls to the same download URL, without modifying the key, also result in randomly relabelled nodes.
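Because each download can relabel the nodes, a regression check shouldn't compare DAGs by node number. If every node's contents are unique within a DAG, one option is to rewrite each edge in terms of its endpoints' contents and compare the resulting sets. Under that approach, the Issue 3 example (node 0 "AAA" with edge [0, 2] becoming node 1 "AAA" with edge [1, 2]) compares equal. The Map/edge-list representation below is hypothetical, not OCC's JSON schema. If contents can repeat, a full graph-isomorphism check would be needed instead.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class DagCompare {
    // Rewrites each edge [from, to] as "contents(from) -> contents(to)".
    // Assumption: node contents are unique within a DAG, so this label-free
    // form identifies the graph regardless of how the nodes are numbered.
    static Set<String> contentEdges(Map<Integer, String> contents, List<int[]> edges) {
        Set<String> result = new HashSet<>();
        for (int[] edge : edges) {
            result.add(contents.get(edge[0]) + " -> " + contents.get(edge[1]));
        }
        return result;
    }

    // True if two downloads describe the same DAG up to node relabelling
    // (same node contents, same content-to-content edges).
    static boolean sameUpToRelabelling(Map<Integer, String> contentsA, List<int[]> edgesA,
                                       Map<Integer, String> contentsB, List<int[]> edgesB) {
        return new HashSet<>(contentsA.values()).equals(new HashSet<>(contentsB.values()))
                && contentEdges(contentsA, edgesA).equals(contentEdges(contentsB, edgesB));
    }
}
```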

-Deleted single DAG

-Uploaded new DAG without specifying an ID number

ISSUE 4: New DAG was not assigned the ID number of the previously deleted one.

CLOSED ISSUE 4: Not necessarily a bug. The drawback is that ID numbers eventually become unmanageably high, but that won't happen for a long time, and tracking and reusing deleted IDs would be more costly.

-Deleted all DAGs

-Uploaded new DAGs. Interesting note: ID numbering did restart from 1 after deleting all DAGs, which is good.
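The ID behaviour observed above can be summarised as a small model to use as a test oracle. IDs increase by one per upload, deleted IDs are not reused, and numbering restarts at 1 after all DAGs are deleted. This sketch reflects only what was observed in this session, not OCC's actual implementation, and the class name is hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

class IdAllocatorModel {
    private final Set<Long> liveIds = new HashSet<>();
    private long nextId = 1;

    // Assigns the next ID; IDs of deleted documents are not reused (Issue 4).
    long upload() {
        long id = nextId++;
        liveIds.add(id);
        return id;
    }

    // Deleting a single document leaves the counter untouched.
    void delete(long id) {
        liveIds.remove(id);
    }

    // Observed behaviour: after deleting all documents, numbering restarts from 1.
    void deleteAll() {
        liveIds.clear();
        nextId = 1;
    }

    boolean exists(long id) {
        return liveIds.contains(id);
    }
}
```

Replaying the same sequence of uploads and deletes against both this model and the live service, then comparing the IDs returned, turns these observations into a repeatable regression check.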

END

+ Regression Testplan

I created this testplan to cover a matrix of OCC's endpoints and the requests they support. A simple spreadsheet worked nicely, but it's best viewed on its own page.

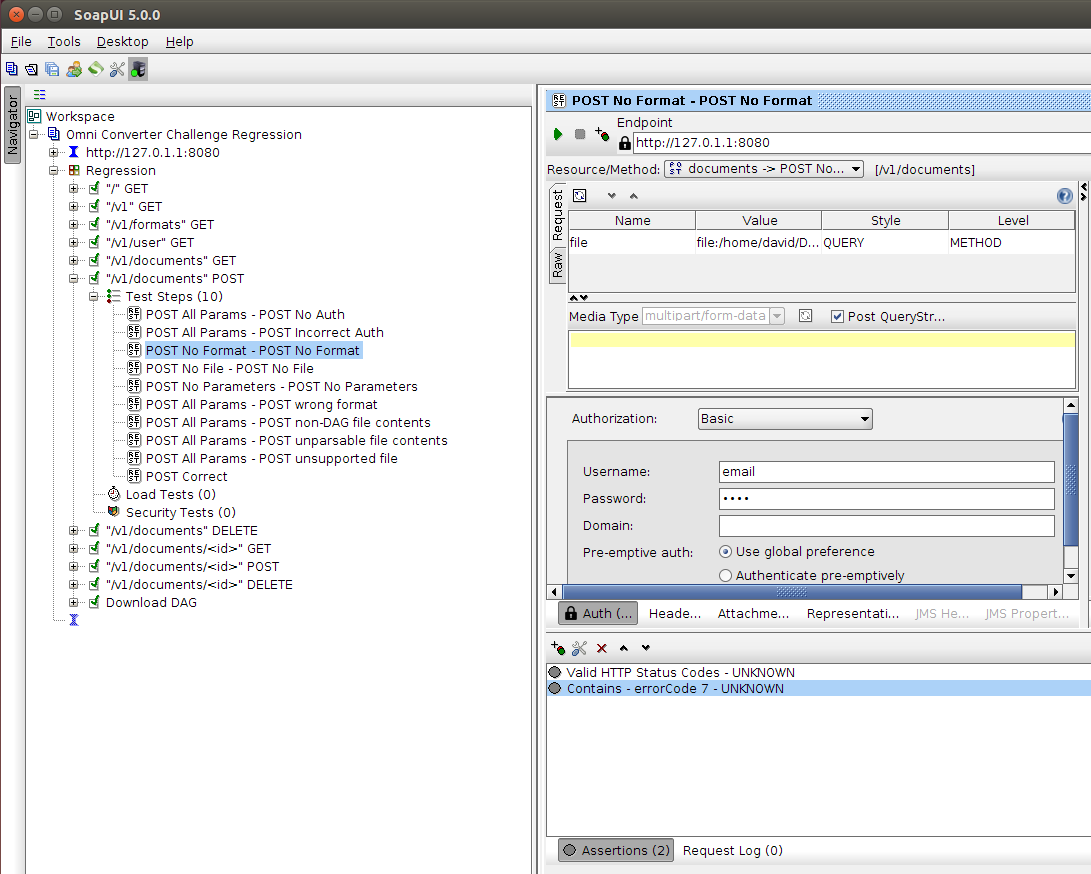

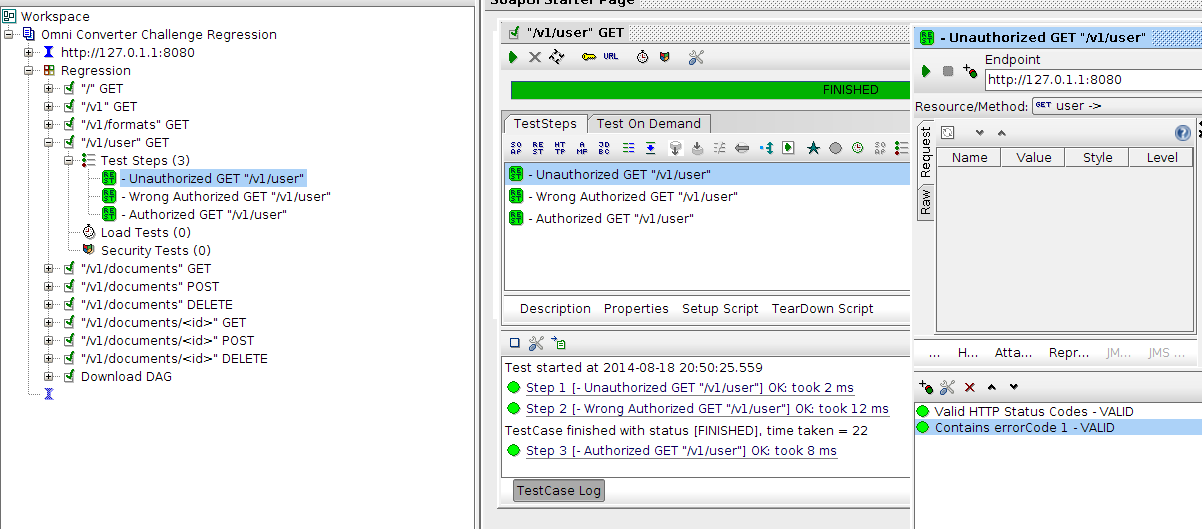

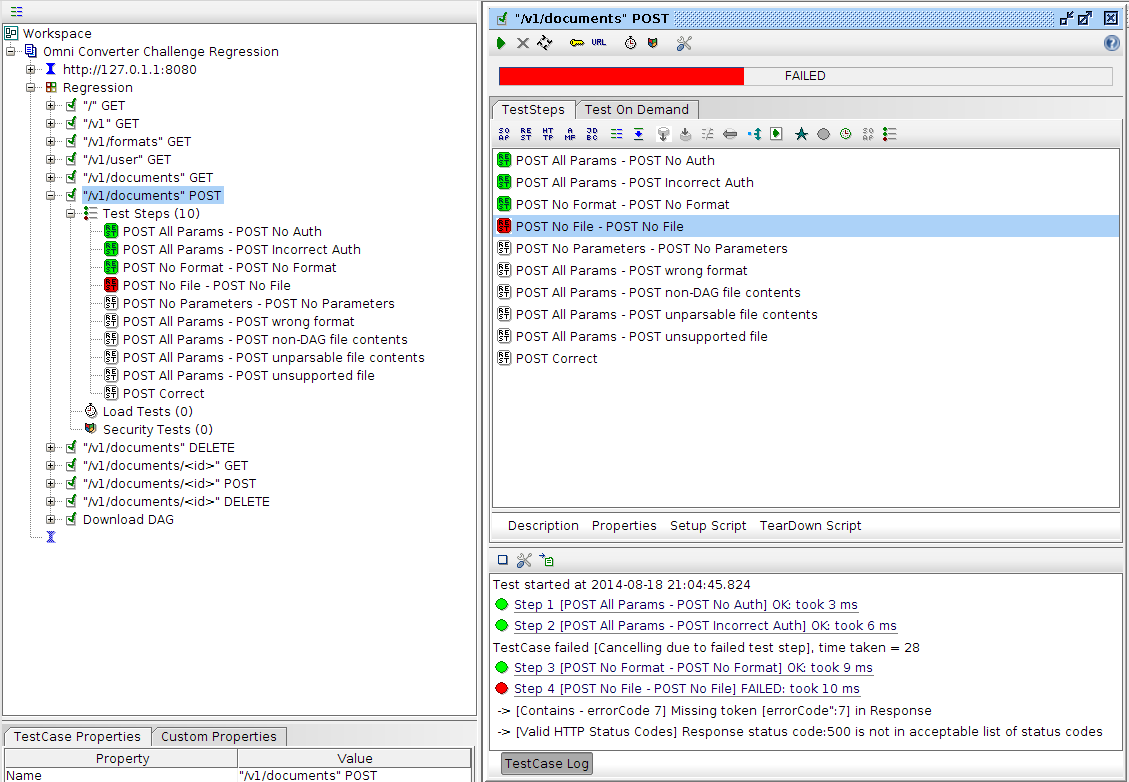

+ SoapUI Test Suite

Automating the regression test plan in SoapUI was effective, but I'm still thinking about how best to show it off.

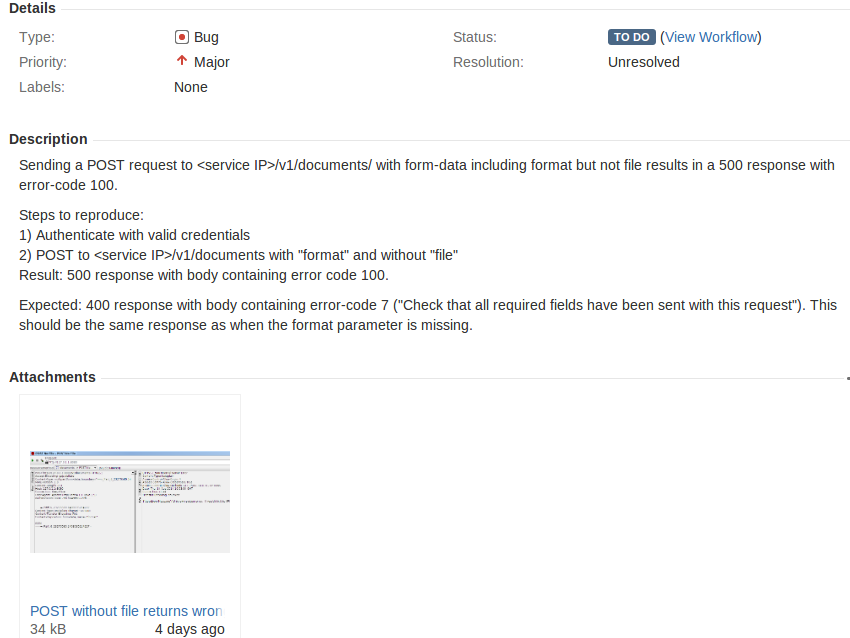

+ Bug Reports

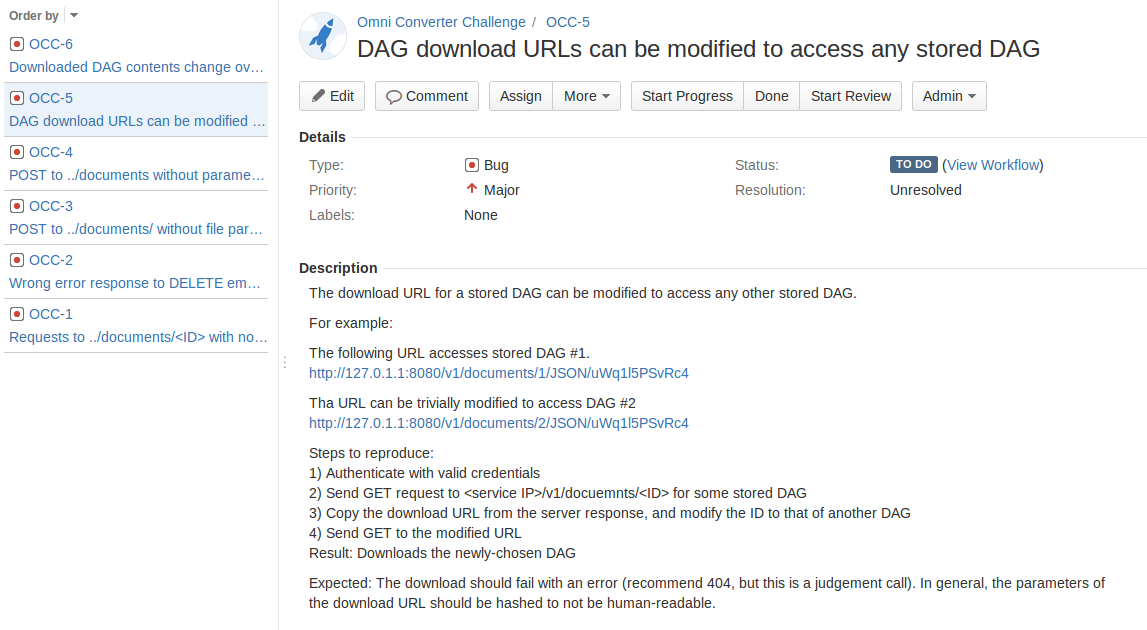

Bare-bones reports of the bugs found during exploratory and regression testing, entered into JIRA. Planning to use a more detailed bug template for the next project.

+ Retrospective

These are the major issues I noticed throughout the project, and the solutions I plan to use.

1) The project took too long

I was frequently distracted by my hobbies. I enjoy pushing towards goals, so setting daily project goals should help keep me working.

2) I've done this sort of testing before

Using technology I'm familiar with was probably good for the first project, since I was already busy establishing a work environment and process. Now that I have a foothold, I'll move on to new technology.

3) My exploratory logs lack useful detail

I tried writing them freehand, which allowed me to skip details at random. I should adopt a formal exploratory log format to stop myself from cutting corners.

4) I was unsure which details to include in my bug reports

The details that should and shouldn't be included are determined by the reports' audience, and I didn't have an audience. For future projects, I will imagine the target software's developer and create a bug report template appropriate to that developer.